This is a blog post version of the talk I recently gave at AWS User Group Ljubljana, the slides of which are here.

The Problem

At work we built a docker image for our production ML workload. At 2700 MB, it was… let’s say “substantial.” The image contained everything we needed: ML models, multiple language runtimes, and all the application assets required to run our service.

The challenge? We needed to deploy this to AWS Fargate, and startup time was 55 seconds. For context, AWS Fargate is a serverless container service – you get docker containers without having to manage servers, handle scaling, or deal with infrastructure. But 55 seconds from “run task” to “container ready” felt painfully slow.

Could we make it faster?

Initial Ideas

I started with the usual suspects for docker image optimization:

Build-time optimizations:

- Remove unnecessary files (caches, node_modules, etc.)

- Use multistage builds

- Switch to smaller base images (alpine instead of ubuntu)

- Organize layers to maximize cache hits (not actually relevant, since Fargate doesn’t use a layer cache)

Then I thought of some unusual suspects for docker image optimization:

Post-build optimizations:

- Deduplicate files by turning copies into links

- Use zstd compression instead of gzip

- Reorder files within layer tar files

- Redistribute files across layers

In this post I’ll dive into the last idea. I also tried deduplicating files, which helped quite a bit, and reordering files, which saved about 1-2% of the compressed docker image size.
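Finding the copies is the first step of the deduplication idea. Here is a minimal Python sketch, assuming the image’s files have been extracted to a local directory. It groups files by size and then by SHA-256 digest, so every group with more than one path is a set of identical files that could be collapsed into links. The helper names `file_digest` and `find_duplicates` are hypothetical, not part of any tool from this post.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, chunk_size: int = 1 << 20) -> str:
    """SHA-256 of a file's contents, read in chunks to bound memory use."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def find_duplicates(root: Path) -> list[list[Path]]:
    """Return groups of regular files under `root` with identical contents."""
    by_size: dict[int, list[Path]] = defaultdict(list)
    for p in root.rglob("*"):
        if p.is_file() and not p.is_symlink():
            by_size[p.stat().st_size].append(p)
    groups: list[list[Path]] = []
    for same_size in by_size.values():
        if len(same_size) < 2:
            continue  # a file with a unique size cannot have a copy
        by_hash: dict[str, list[Path]] = defaultdict(list)
        for p in same_size:
            by_hash[file_digest(p)].append(p)
        groups.extend(sorted(g) for g in by_hash.values() if len(g) > 1)
    return groups
```

Grouping by size first means only files that share a size get hashed, which keeps the pass cheap on a multi-gigabyte image.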

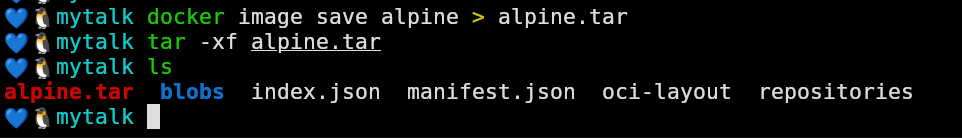

Docker Images Are Just Tars in Tars

Here’s what you see if you extract a docker image file:

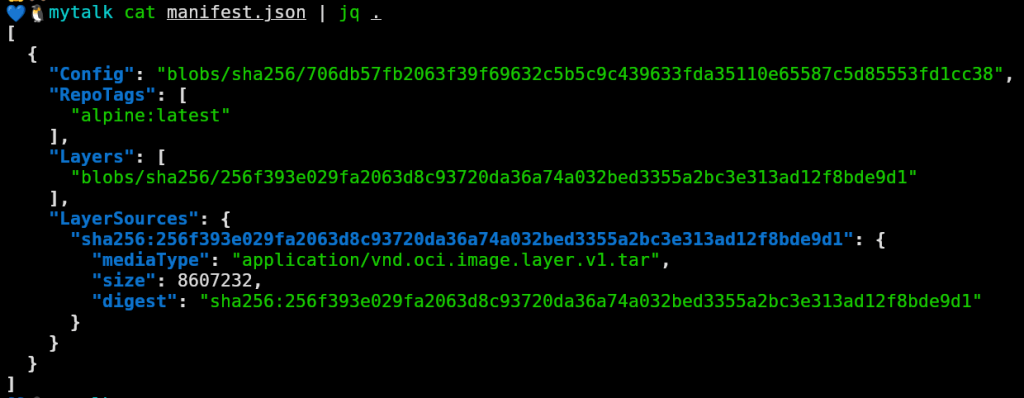

The blobs directory has tar files of layers, and their details and order are specified in manifest.json:

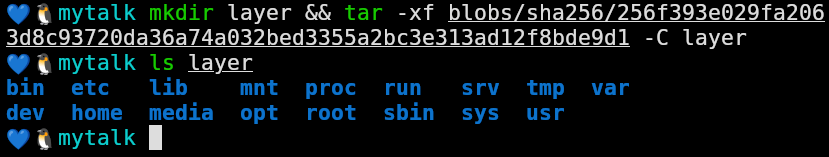

Extracting a layer’s tar file shows the actual files we’d see if we ran a container from the image. The alpine image above has a single layer, and we can see its files like this:

The key insight: we can distribute our image files across layers however we want. Docker images are just tar files containing other tar files. The manifest.json file points to each layer’s tar, and those layers stack on top of each other to form the final filesystem.
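To make the “tars in tars” structure concrete, here is a minimal Python sketch that reads manifest.json from an extracted `docker save` archive and stacks its layers into the final list of files. It assumes the archive holds a single image, that `Layers` lists tar paths relative to the archive root, and that layers are plain or gzip tars. It applies regular whiteout files (`.wh.<name>`, which delete `<name>` from lower layers) but ignores opaque-directory whiteouts. The function names are hypothetical.

```python
import json
import posixpath
import tarfile
from pathlib import Path

WHITEOUT = ".wh."
OPAQUE = ".wh..wh..opq"  # opaque-directory marker, ignored in this sketch


def layer_tars(image_dir: Path) -> list[Path]:
    """Layer tar paths in stacking order (lowest layer first), per manifest.json."""
    manifest = json.loads((image_dir / "manifest.json").read_text())
    # `docker save` writes a JSON list with one entry per image; take the first.
    return [image_dir / p for p in manifest[0]["Layers"]]


def merged_files(image_dir: Path) -> dict[str, int]:
    """Map each regular file in the final filesystem to its size in bytes."""
    files: dict[str, int] = {}
    for tar_path in layer_tars(image_dir):
        with tarfile.open(tar_path) as tar:  # default mode "r" also reads gzip
            entries = [(posixpath.normpath(m.name), m) for m in tar.getmembers()]
        # Pass 1: this layer's whiteouts delete paths from the layers below.
        for name, _ in entries:
            parent, base = posixpath.split(name)
            if base.startswith(WHITEOUT) and base != OPAQUE:
                target = posixpath.join(parent, base[len(WHITEOUT):])
                for path in [p for p in files if p == target or p.startswith(target + "/")]:
                    del files[path]
        # Pass 2: regular files add to, or override, what the lower layers had.
        for name, member in entries:
            if member.isfile() and not posixpath.basename(name).startswith(WHITEOUT):
                files[name] = member.size
    return files
```

Because only the stacked result matters to a running container, any split of these files across layers that produces the same `merged_files` output gives the same filesystem.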

This meant layer redistribution wasn’t just possible – it might be powerful if we understood how Fargate downloads and extracts images.

How Does Fargate Get Images?

I found an AWS blog post stating that Fargate uses containerd under the hood.

So containerd is the container runtime that powers Fargate (it identifies itself as containerd/2.0.5+unknown). Reading through containerd’s code revealed how it pulls an image:

- Layers download in parallel – all layers start downloading simultaneously

- Layers extract in sequence – in order, one at a time

This creates an interesting optimization problem. If we have three layers:

- All three start downloading at once (sharing bandwidth)

- Layer 1 extracts as soon as it finishes downloading

- Layer 2 extracts as soon as BOTH: it has finished downloading AND layer 1 has finished extracting

- Layer 3 extracts as soon as BOTH: it has finished downloading AND layer 2 has finished extracting

The total time is determined by the interleaving of these parallel downloads and sequential extractions.
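To put numbers on this interplay, here is a small Python model of the pull. It is an idealized sketch, not containerd’s code: it assumes all unfinished layers share a fixed total bandwidth `D` equally, and that extraction runs one layer at a time at a constant speed `E`. The function name `pull_time` is hypothetical.

```python
def pull_time(sizes: list[float], D: float, E: float) -> float:
    """Seconds until the last layer finishes extracting.

    sizes: layer sizes in MB, in extraction (stacking) order.
    D: total download bandwidth in MB/s, split equally among active downloads.
    E: extraction speed in MB/s; only one layer extracts at a time.
    """
    # Downloads: with equal sharing, every unfinished layer has received the same
    # amount so far, so layers finish in order of size (smallest first).
    done_at = [0.0] * len(sizes)
    order = sorted(range(len(sizes)), key=lambda i: sizes[i])
    t, received = 0.0, 0.0
    for rank, i in enumerate(order):
        active = len(sizes) - rank          # layers still downloading
        t += (sizes[i] - received) * active / D
        received = sizes[i]
        done_at[i] = t
    # Extractions: layer i starts once it is downloaded AND layer i-1 is extracted.
    end = 0.0
    for i, size in enumerate(sizes):
        end = max(done_at[i], end) + size / E
    return end
```

With D = E = 225 MB/s, the model gives 24 seconds both for a single 2700 MB layer and for three equal 900 MB layers: equal layers all finish downloading at the same moment, so extraction never overlaps the download. Unequal sizes are what let the two phases overlap.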

Measuring Download Speed: Build Your Own Registry

To optimize layer sizes, I needed to know the actual download and extraction speeds. Time for an experiment.

I cloned the open-source docker registry (distribution/distribution) and asked Claude Code to add logging for layer download times. Then I:

- Ran the custom registry on EC2

- Pushed our image to it

- Created a Fargate task using this registry

- Analyzed the logs

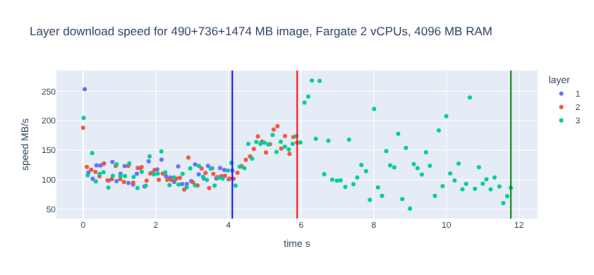

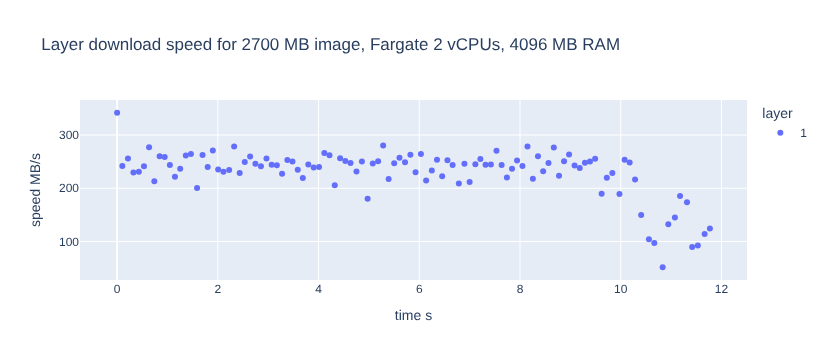

The result? Download speed averaged ~225 MB/s.

This is the speed I always got when using 2 vCPUs and 4096 MB RAM.

Here is a plot of the average speed when using a single layer for the whole image:

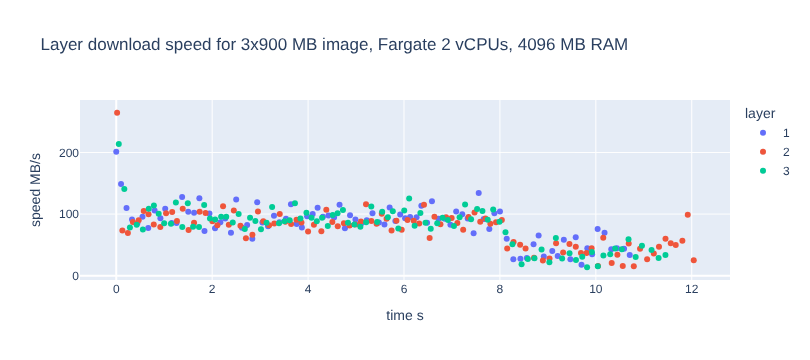

And here is a plot of the average speed when using three equally sized layers for the same image:

We shouldn’t expect any difference in the total download time, since both pulls transfer the same amount of data over the same connection. We do see something odd, though: the download speed is higher at the start than at the end, and this effect is much more pronounced in the three-layer pull.

The registry logs can’t show extraction times, so we’ll assume extraction runs at about the same speed as the download.

Optimal Layer Sizes via Linear Algebra

Now for the fun part. Given:

- Total image size: 2700 MB

- Download speed: D = 225 MB/s (shared equally among active downloads)

- Extraction speed: E = 225 MB/s

What layer sizes minimize total time?

I set up a system of linear equations. Let’s say we have three layers with sizes L1, L2, L3:

```python
import numpy as np

D = E = 225  # MB/s: download bandwidth and extraction speed

# Unknowns: sizes of layers 1, 2 and 3 in MB.
# While layers 2 and 3 are both still downloading, each gets D/2 MB/s.
A = [
    [1, 1, 1],                     # sum to 2700
    [1/E + 1/(D/2), -1/(D/2), 0],  # layer 1 extraction ends = layer 2 download ends
    [0, 1/E + 1/D, -1/D],          # layer 2 extraction ends = layer 3 download ends
]
x = [2700, 0, 0]
layer_sizes = np.linalg.solve(A, x)
```

The math works by ensuring that extraction and download are perfectly pipelined – layer 2 finishes downloading exactly when layer 1 finishes extracting, and so on.
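The same pipelining condition extends to any number of layers n. After layer k finishes downloading, each of the remaining n - k layers has also received L_k MB and they share the bandwidth equally, so requiring layer k’s extraction to end exactly when layer k + 1’s download ends gives L_k / E = (n - k)(L_{k+1} - L_k) / D, that is L_{k+1} = L_k (1 + D / ((n - k) E)). Each equation links only two neighboring layers, so the system can be solved by forward substitution without numpy. A sketch under the same assumptions, with the hypothetical name `optimal_layer_sizes`:

```python
def optimal_layer_sizes(total: float, n: int, D: float, E: float) -> list[float]:
    """Layer sizes in MB such that each layer's extraction ends exactly when the
    next layer's download ends, assuming D is shared equally by active downloads."""
    ratios = [1.0]  # sizes relative to layer 1
    for k in range(1, n):            # k = 1 .. n-1, 1-indexed as in the text
        remaining = n - k            # layers still downloading after layer k
        ratios.append(ratios[-1] * (1 + D / (remaining * E)))
    scale = total / sum(ratios)      # enforce L_1 + ... + L_n = total
    return [r * scale for r in ratios]
```

For n = 3 and D = E = 225 MB/s the ratios are 1 : 1.5 : 3, which reproduces the numpy solution above up to rounding.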

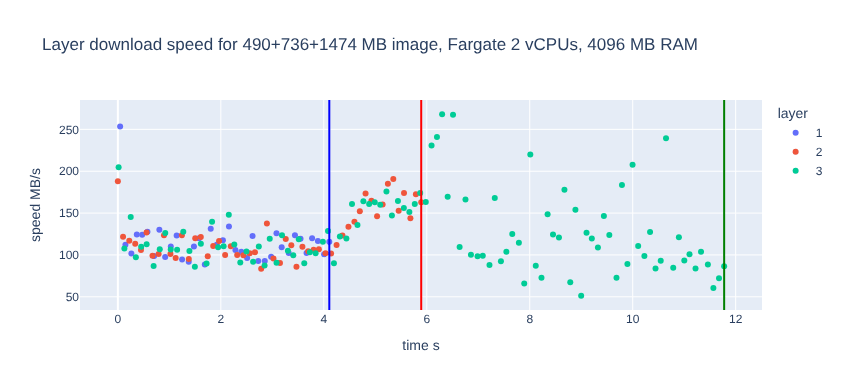

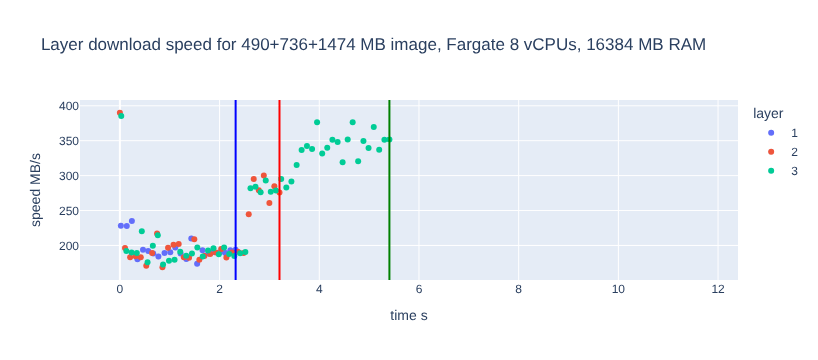

I rebuilt the image with the optimal layer sizes: 490 MB, 736 MB, 1474 MB.

Here is a plot of the layer download speeds:

Layer 1 finishes much sooner, then layer 2, and finally layer 3 – this is going to work!

The Result

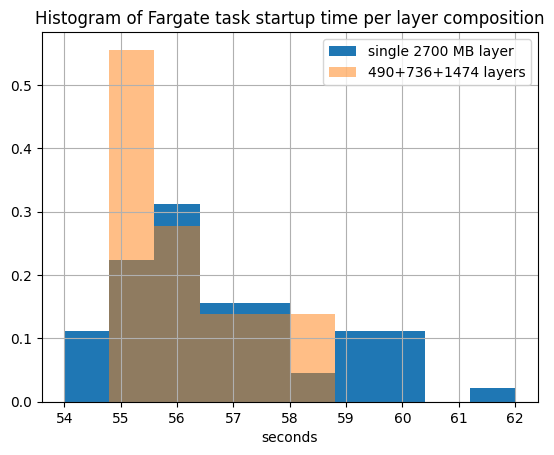

Total startup time: 55 seconds.

No improvement!

Here is a plot comparing histograms of startup times for the single-layer image and the image with 3 optimized layers, showing that the optimized layers make no real difference:

“Maybe it’s a CPU bottleneck?” I thought. Blog posts suggested that Fargate’s network gets faster with larger CPU allocations, so I spun up a task with 8 vCPUs and 16384 MB of memory.

Here is a plot of the average download speed:

Oh wow – layers finish downloading a lot sooner!

Total startup time: Still 55 seconds.

A Hypothesis for the Real Bottleneck: IOPS Limits

My current hypothesis is that the image is downloaded and extracted onto remote storage that is subject to IOPS limits. If so, it doesn’t matter how we redistribute our files – they still have to be downloaded and then extracted on this remote storage system, so the same files lead to the same startup time.

Conclusion

I can’t speed up Fargate startup times – at least not through layer optimization.

Outside Fargate, though, layer redistribution works great. When testing the above on ECS with EC2, we see the expected speedups.

For these experiments, and also for actual use at work, I made a tool that takes a docker image and creates a new one with the same final filesystem, but with specified layer sizes. It deserves a full post of its own, as well as some polishing first, so stay tuned.
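Until that post, here is only a rough Python sketch of the core step such a tool needs, not the tool itself: walk the files in a fixed order and move on to the next layer once the current one reaches its target size. The file list and the `assign_layers` helper are hypothetical; writing the new layer tars and updating manifest.json and the image config are left out.

```python
def assign_layers(files: list[tuple[str, int]], targets: list[int]) -> list[list[str]]:
    """Greedily split (path, size) pairs into len(targets) layers.

    Files keep their order. A layer is closed once its total reaches its target,
    so it overshoots by less than the size of its last file; the final layer
    takes whatever is left.
    """
    layers: list[list[str]] = [[] for _ in targets]
    current, filled = 0, 0
    for path, size in files:
        if current < len(targets) - 1 and filled >= targets[current]:
            current, filled = current + 1, 0  # target reached: start next layer
        layers[current].append(path)
        filled += size
    return layers
```

Keeping the file order fixed is a deliberate simplification; a real tool could also choose which files go into each layer, for example to keep related files together.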

I’m planning to post separately about deduplicating files within images to decrease image size, and also about redistributing the layers of all images across many repositories for optimal layer caching. tl;dr: my algorithm shrinks the top 20 repos on Docker Hub from 27 TB total down to 20 TB, just by reshuffling files and layers post-build.

Have you tried optimizing Fargate startup times? Found any techniques that work? I’d love to hear about them.